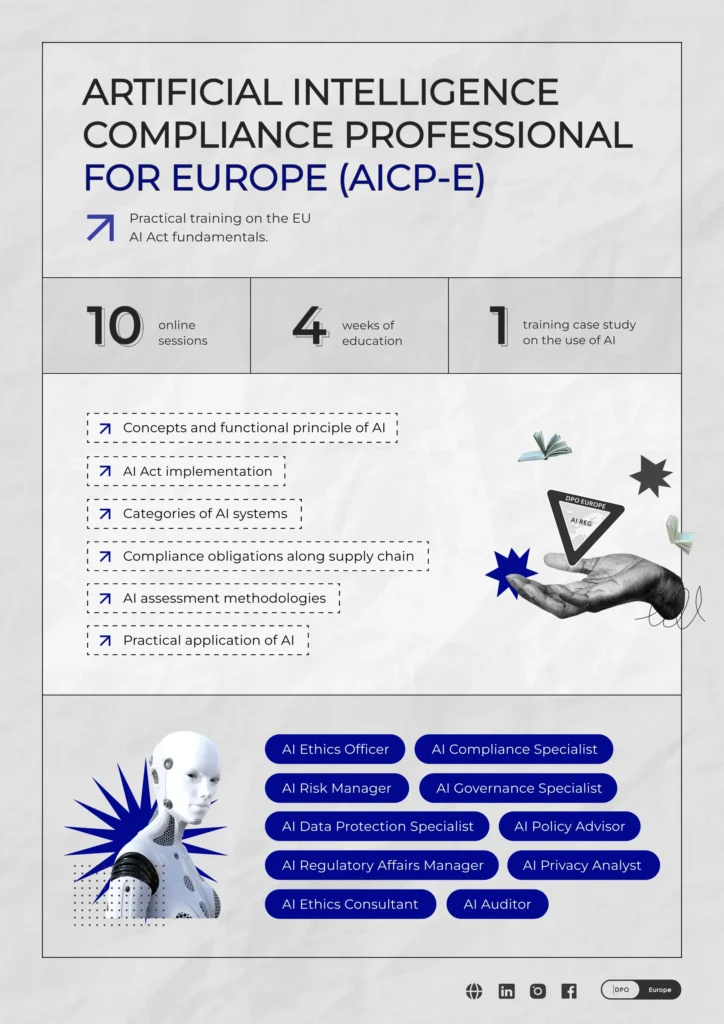

Practical training on the fundamentals of the EU AI Act and key aspects of AI. Learn how to use AI systems safely when processing personal data.

- August 18 → September 17

- 10 classes

- Theory, practical tasks in groups, tests

- Electronic certificate via Accredible

- €1050 + VAT

The course is based on the requirements of the EU AI Act

This training will enhance your understanding of new AI-specific rules and offer fresh insights into existing frameworks. You’ll gain knowledge and practice skills to navigate the AI landscape effectively, along with a clear explanation of the requirements and reasons for AI compliance.

Achieving AI compliance requires in-depth knowledge across multiple domains. While traditional tools used by privacy lawyers and Data Protection Officers (DPOs) remain relevant, they need refinement and recalibration for this high-risk field.

Program

Foundations of AI

- You will learn common terms, concepts and definitions, e.g., AI, AI system, AI model, General-Purpose AI model, Artificial General Intelligence, Weak AI or Symbolic AI, Deep Learning, Machine Learning, Neural Network, Large Language Model;

- The problem of the definition of AI system in the EU AI Act;

- You will learn the downsides of the EU AI Act's definition of an AI system.

Historical development of AI

- You will learn about the different waves of AI development: first, second and third wave;

- You will learn about the problems of regulating AI.

Introduction to the European Union AI Act

- Brief historical overview;

- Purposes;

- Structure;

- Terminology;

- Players (Operators): Roles and Subjects: Provider, Importer, Distributor, Deployer.

Scope of application

- Material Scope (including meaning of AI System and AI Model);

- Exceptions from material scope;

- Territorial Scope;

- AI Act Self-Assessment.

Risk-based Approach and legal regimes of the AI Act

- Prohibited AI Practices;

- Transparency obligations for providers and deployers of certain AI systems;

- Minimal Risk AI Systems;

- AI Risk Assessment.

High Risk AI Systems

- Classification of AI systems as high-risk;

- AI Systems with Limited Risk;

- Risk Management System and Quality Management System;

- Data and Data Governance;

- Technical Documentation and Documentation Keeping;

- Record Keeping and Automatically Generated Logs;

- Transparency and Information Provisions;

- Human Oversight, Accuracy, Robustness and Cybersecurity;

- Conformity Assessment and EU Declaration of Conformity;

- Fundamental Rights Impact Assessment;

- EU database for high-risk AI systems;

- Post-market monitoring by providers and post-market monitoring plan for high-risk AI systems;

- Sharing of information on serious incidents, corrective actions and duty of information;

- Cooperation with competent authorities, and authorised representatives of providers of high-risk AI systems.

General-Purpose AI Models

- Concept of General Purpose AI Model;

- Authorised representatives of providers of general-purpose AI models;

- Defining Systemic Risk;

- General Purpose AI Models without Systemic Risk;

- General Purpose AI Models with Systemic Risk;

- Mutual assistance, market surveillance and control of general-purpose AI systems.

Measures for supporting Innovation

- AI Regulatory sandboxes;

- Measures for providers and deployers, in particular SMEs, including start-ups;

- Derogations for specific operators.

Governance, Liability and Enforcement

- Governance at the EU Level: AI Office, European AI Board, Advisory Forum, Scientific Panel of Independent Experts, Testing Support Structures;

- Domestic Governance: Market Surveillance Authorities, Notifying Authorities, Notified Bodies; other authorities (Authorities Protecting Fundamental Rights, Data Protection Authorities, Courts);

- Market surveillance and control of AI systems in the Union market;

- Administrative Liability under the AI Act: categories of fines and enforcement mechanism;

- Remedies and rights enforcement procedures.

Data Governance and Personal Data in the AI&ML Lifecycle

- When Data Protection legislation applies to the AI&ML Lifecycle;

- Data Protection Principles and AI Challenges;

- Purpose Limitation and Personal Data Reuse: Compatibility Assessment, data reuse by processor;

- Legal Grounds for Personal Data Processing: Legitimate Interest Problem and Pitfalls of Consent;

- AI and Transparency Principle;

- AI and Data Protection Impact Assessment;

- AI and Automated Decision Making;

- AI and Privacy by Design and by Default;

- AI and Personal Data of Special Categories.

Course Format

- 4 weeks / €1050 + VAT

- 10 classes

- Group work with practical tasks

- Self-assessment tests

- In-depth work on an AI use case with trainers

- Collaborative tools: Miro and Notion

By the end of this course, you will be able to:

- Confidently determine whether your tools meet the legal definition of an AI system under the EU AI Act.

- Assess the applicability of the AI Act to your specific use cases and operational framework.

- Evaluate the risk level of your AI system and develop a comprehensive risk analysis.

- Identify your role as an AI operator and understand the corresponding legal obligations.

- Adjust and optimize your data protection management program in alignment with AI Act requirements.

- Refine your DPIA and Fundamental Rights Impact Assessment to ensure compliance.

- Conduct conformity assessments, enabling you to bring your AI systems and tools to market with confidence.

Learn from professionals

Schedule

August 18 → September 17

Why Is This Course Your Best Choice?

Hands-on learning

Engage in practical exercises and tests that reflect real-world scenarios. You’ll also work with the trainers on a practical case that reflects current challenges in AI regulation.

Certified trainers

Learn from industry experts with internationally acknowledged certifications (AIGP, FIP, CIPP/E, CIPP/US, CIPM) and solid professional experience in AI and data protection.

Comprehensive coverage

From key concepts to enforcement strategies, this training covers what you need to become proficient in AI compliance and gain a deep understanding of the EU AI Act.

Structured training materials

Trainer-authored boards in Miro and 20+ useful materials: guidelines, diagrams, checklists, and regulatory documents.

Connecting with like-minded people and networking

Collaborating with people who share your interests is motivating and helps you achieve better results. In such an environment, you can easily discuss ideas, share the latest news and cases, ask questions, and receive feedback.

Guarantees

Money-Back

If you find that the course isn’t the right fit for you, we’ll refund the full cost.

Data Security

Your privacy is our priority. We ensure your data is securely protected, not shared with third parties, and used in marketing campaigns only with your consent.

Post-Learning Support

Your learning doesn’t end with the training. You’ll have access to the chat, where our trainers are ready to answer your course-related questions.

Special Offer

50% Off for Full-Time Students

We believe in supporting students in their professional growth. That’s why we offer a 50% discount to all full-time students on our foundational courses: GDPR Data Privacy Professional and Global Data Privacy Manager.

Please note: A valid student ID is required when enrolling.
